The Paradox of Innovation: How Technology is Simultaneously Helping and Hurting the Environment

September 8, 2022 / Suzanne Taylor

Not long ago, the cost of energy in Plattsburgh, N.Y., a small town near the Canadian border, was one of the lowest in the U.S. That changed when Plattsburgh became a boom town for bitcoin.

The cheap power lured bitcoin miners to the town, where they set up shop in strip malls and installed thousands of mining machines that consumed large amounts of electricity. According to Digiconomist's Bitcoin Energy Consumption Index, a single bitcoin transaction uses 1,518.41 kWh of electricity, equivalent to an average U.S. household's power consumption over more than 52 days. Bitcoin's annualized carbon footprint totals 78.35 million metric tons of CO2, comparable to the carbon footprint of Oman.
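The household comparison is simple division. Here is a minimal sketch in Python. It assumes an average U.S. household uses about 10,600 kWh of electricity per year, an illustrative figure that does not come from the index:

```python
# ASSUMPTION: ~10,600 kWh per year for an average U.S. household (illustrative).
TRANSACTION_KWH = 1_518.41          # per-transaction figure cited in the text
HOUSEHOLD_KWH_PER_YEAR = 10_600     # assumed, not taken from the index


def household_days(energy_kwh: float,
                   annual_kwh: float = HOUSEHOLD_KWH_PER_YEAR) -> float:
    """Convert an energy amount into days of average household consumption."""
    daily_kwh = annual_kwh / 365
    return energy_kwh / daily_kwh


print(f"{household_days(TRANSACTION_KWH):.1f} days")  # about 52.3 days
```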

The crypto mining operations in Plattsburgh drove up electricity prices for residents and strained the city's infrastructure. The same scenario has played out in other towns where energy prices run low and bitcoin opportunists run high.

This example illustrates how innovation often has unintended consequences. Therefore, in our quest to innovate and push technology to its limits, we must do so responsibly.

Technology is accelerating the decentralization of the economy by distributing decisions among many organizations. That leaves the economy's stability more exposed to the law of supply and demand and makes the consequences of computing more widespread. New technologies are emerging from many sectors, some with a conscious regard for environmental impact and some without. The direct link between technological advances and environmental impacts has led people to develop ways to mitigate and solve these challenges.

But there is a paradox: many innovations developed by the tech industry can help mitigate environmental problems, yet they carry environmental costs of their own.

Blockchain and compute power

Blockchain, the foundation of cryptocurrency, is itself a paradox. The technology can be applied to many use cases that benefit society, but many blockchain applications, especially those built on proof-of-work networks such as Bitcoin, require a significant amount of compute power.

Those use cases include helping the supply chain industry adopt sustainable practices by providing product transparency and more efficient management, which improves traceability and integrity. According to Gartner, blockchain has supported several sustainable innovations, including green transportation and logistics management, tokenized incentives and rewards for net-zero activities, and the authentication and certification of carbon credits and offsets. Blockchain has also helped the construction industry calculate embodied and operational carbon, supporting carbon management, carbon trading and energy management.

But for all its benefits, proof-of-work blockchain requires high compute power because miners compete to solve complex cryptographic puzzles in order to verify transactions. And just as with cryptocurrency in Plattsburgh, the compute power behind blockchain applications comes at a cost.
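To see why proof-of-work consumes so much compute, consider a simplified puzzle: keep changing a number (the nonce) until the SHA-256 hash of the data starts with a required run of zeros. This is a toy sketch only. Bitcoin itself double-hashes a block header and compares the result against a numeric target. The `mine` function and its `difficulty` parameter are hypothetical names used for illustration:

```python
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Toy proof-of-work: find a nonce whose SHA-256 digest of
    block_data + nonce begins with `difficulty` hex zeros."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1  # every failed guess is discarded computation


if __name__ == "__main__":
    for difficulty in range(1, 5):
        nonce, digest = mine("example block", difficulty)
        # nonce + 1 hashes were computed before a valid one was found
        print(f"difficulty {difficulty}: {nonce + 1} hashes -> {digest[:16]}...")
```

Each extra required zero multiplies the expected number of hashes by 16. That is why raising difficulty across a large network of miners translates directly into more electricity, while checking a finished answer takes only one hash.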

Is AI both a problem and a solution?

AI supports several environmentally positive ventures: self-driving electric cars, emissions and energy use optimization, endangered species tracking, agricultural and crop optimization, and smart energy grid optimization. But these outcomes come at a cost. The compute power required to train AI models, particularly large neural networks, is significant.

Is it worth it if training AI to optimize energy use and emissions itself takes an ever-increasing amount of energy?
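One way to frame the question is energy payback: how long a deployed model must run before its savings cover the energy spent training it. Here is a minimal sketch with hypothetical inputs. Real accounting would also need to cover retraining and ongoing inference, which the hypothetical `daily_inference_kwh` parameter only approximates:

```python
def payback_days(training_kwh: float, daily_savings_kwh: float,
                 daily_inference_kwh: float = 0.0) -> float:
    """Days of deployment before cumulative net savings offset training energy.

    Returns infinity if the model never saves more than it consumes."""
    net_daily = daily_savings_kwh - daily_inference_kwh
    if net_daily <= 0:
        return float("inf")
    return training_kwh / net_daily


# Hypothetical figures for illustration only.
print(payback_days(training_kwh=50_000, daily_savings_kwh=400))  # 125.0
print(payback_days(training_kwh=50_000, daily_savings_kwh=400,
                   daily_inference_kwh=150))  # 200.0
```

If each retraining cycle resets the clock, the model only comes out ahead when it runs longer between retrainings than its payback period.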

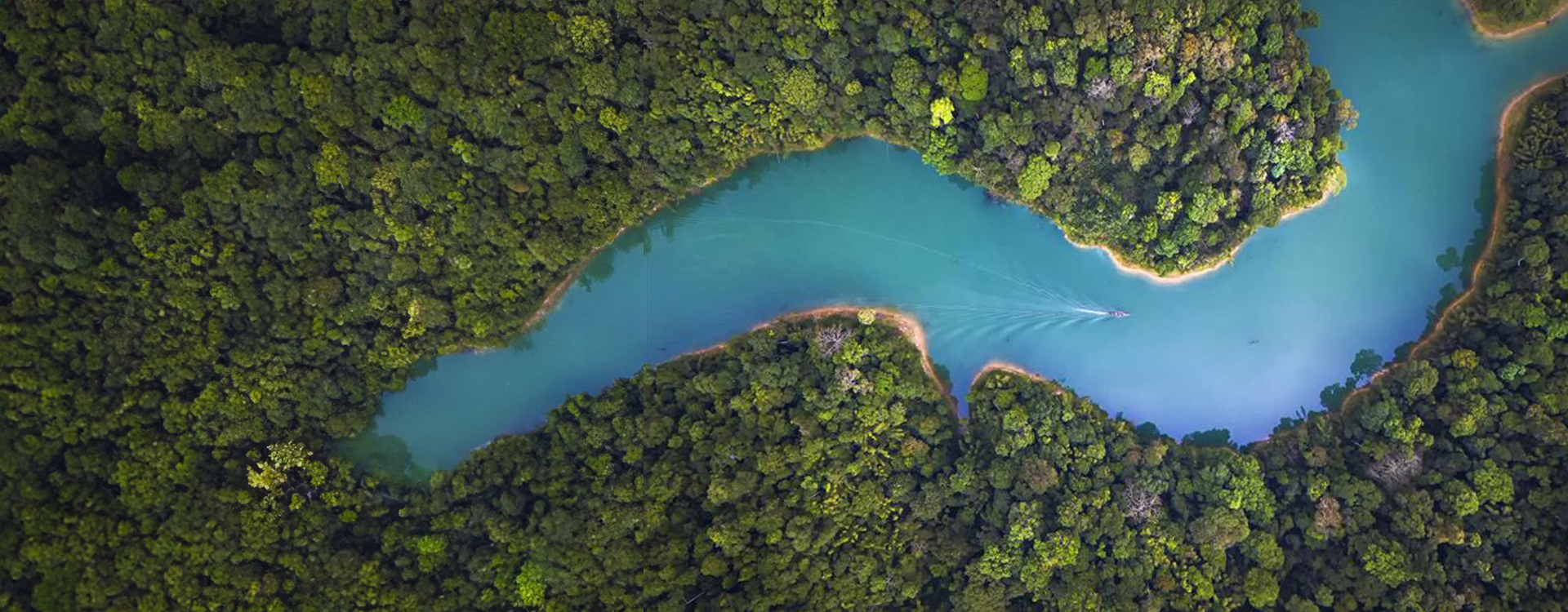

AI and machine learning require a lot of compute power, which usually comes from data centers. Whether on premises or in the cloud, data centers are one of the most energy-intensive building types, consuming 10 to 50 times the energy per floor space of a typical commercial office building. Data centers also use enormous amounts of water to cool servers and operate the buildings. This consumption of natural resources directly affects the towns where data centers are located, not unlike Plattsburgh.

The paradox continues, because AI is also being used to address this challenge. Google applied DeepMind's machine learning to its data centers to reduce the energy needed for cooling. In 2016, Google reported that it had cut the energy used for cooling by 40%, which equated to a 15% reduction in overall power usage effectiveness (PUE) overhead.
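PUE is the ratio of total facility energy to the energy delivered to IT equipment. Everything above 1.0 is overhead, such as cooling and power conversion. Here is a minimal sketch, using hypothetical energy figures, of how a percentage cut to that overhead changes PUE:

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def reduce_overhead(pue_value: float, overhead_cut: float) -> float:
    """Apply a fractional cut to the overhead (PUE - 1), leaving IT load unchanged."""
    overhead = pue_value - 1.0
    return 1.0 + overhead * (1.0 - overhead_cut)


# Hypothetical facility: 1.12 GWh total for 1.00 GWh of IT load.
baseline = pue(total_facility_kwh=1_120_000, it_equipment_kwh=1_000_000)
improved = reduce_overhead(baseline, 0.15)  # a 15% cut to overhead
print(f"baseline PUE {baseline:.3f} -> {improved:.3f}")
```

Because the cut applies only to the overhead, the headline PUE in this example moves by less than 2%. At data center scale, that small ratio change can still represent a large amount of energy.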

So, while AI is putting a strain on data centers, the technology is also helping reduce those data centers' energy use.

Balancing technology and natural resources

The compute power that blockchain and AI require consumes tremendous amounts of energy and natural resources, including water and metals. But so do our everyday devices.

Extracting these natural resources, especially metals, has significant environmental impacts. The energy-intensive work of mining, smelting, exporting, assembling and transporting these materials and goods harms the environment and the communities near these operations, and it can also affect nearby bodies of water.

Phones and computers for personal and commercial use are built with metals such as nickel, cobalt and copper. Then there's lithium, an essential resource behind rechargeable batteries. Estimates say a Tesla Model S battery pack contains about 63 kg of lithium, and as the electric vehicle market continues to grow, the availability of lithium is a concern. The rise of environmentally friendly electric vehicles will not come without a cost, which is yet another paradox. Even the rare earth elements behind clean technologies come with compromises. Neodymium, for example, plays a crucial role in clean technologies such as the permanent magnets in wind turbines and electric vehicle motors. While the element is not particularly rare, it still must be mined.

Questioning quantum's environmental impact

Mainstream quantum computing is moving closer to reality, but many people may not yet be considering the impact it will have on the environment. Instead, the focus is on research and development to build the first large-scale, commercially viable quantum computer that can cost-effectively solve problems only quantum machines can tackle.

Building a quantum computer costs tens of billions of dollars, and the machines must operate just above absolute zero (about -272°C, or roughly 1 kelvin). The total cost to build and operate a quantum computer raises the question of what quantum data centers would cost, or what Quantum as a Service would cost if it becomes a reality.

In the meantime, scientists are researching the electronic components that control qubits (the quantum counterpart to bits in classical computing) so that those components do not have to operate at such low temperatures, which could help offset some of the environmental impact.

Quantum presents another paradox: it could solve chemistry problems that are out of reach today. The technology could help develop environmentally friendly materials, such as catalysts we do not yet have that could make carbon-intensive processes cleaner. And because optimization problems are one of quantum computing's sweet spots, as they are for AI, it could bring more power to energy optimization. But at what cost?

Innovating responsibly

As technologies continue to develop, we are forced to consider their environmental impacts. There will always be a tug of war between new ways of using computing to solve environmental problems and the impact those solutions have on the environment. It is therefore vital to balance innovation with solving the problems those advancements create.

To discuss how technology can help solve real-world business problems — responsibly, contact Unisys.